What We Learned Building A Call Summary App with GPT-4

Note: This article was originally published on Medium when we launched LazyNotes. For context, read more about who we are here and here.

Many of you who work with Swift Ventures know that we are doers by default. Rather than follow the typical VC schedule of back-to-back meetings all day, we enjoy rolling up our sleeves and taking on projects that help our portfolio companies or make us more informed about their space.

To that end, when OpenAI first debuted GPT-3 a few years ago, we launched a fun-yet-useful app called NamingMagic.com to test the new model. We didn’t anticipate that thousands of people a day would use it. We did, however, learn a lot about the costs and limits of using OpenAI’s APIs!

More recently, we started using GPT-4 paired with OpenAI’s Whisper speech-recognition model to analyze audio from pitch calls. By way of background, we were not satisfied with several third-party solutions designed for this task. We didn’t need distracting features like long transcripts, audio playback, and annotations. We needed one thing done really well: a highly accurate, customized summary of each call in our CRM (the same thing we already do with pen and paper). Using GPT-4 out of the box, we continue to be astounded at how effective it is at that core task of capturing important details. Our notes got an order of magnitude better overnight.

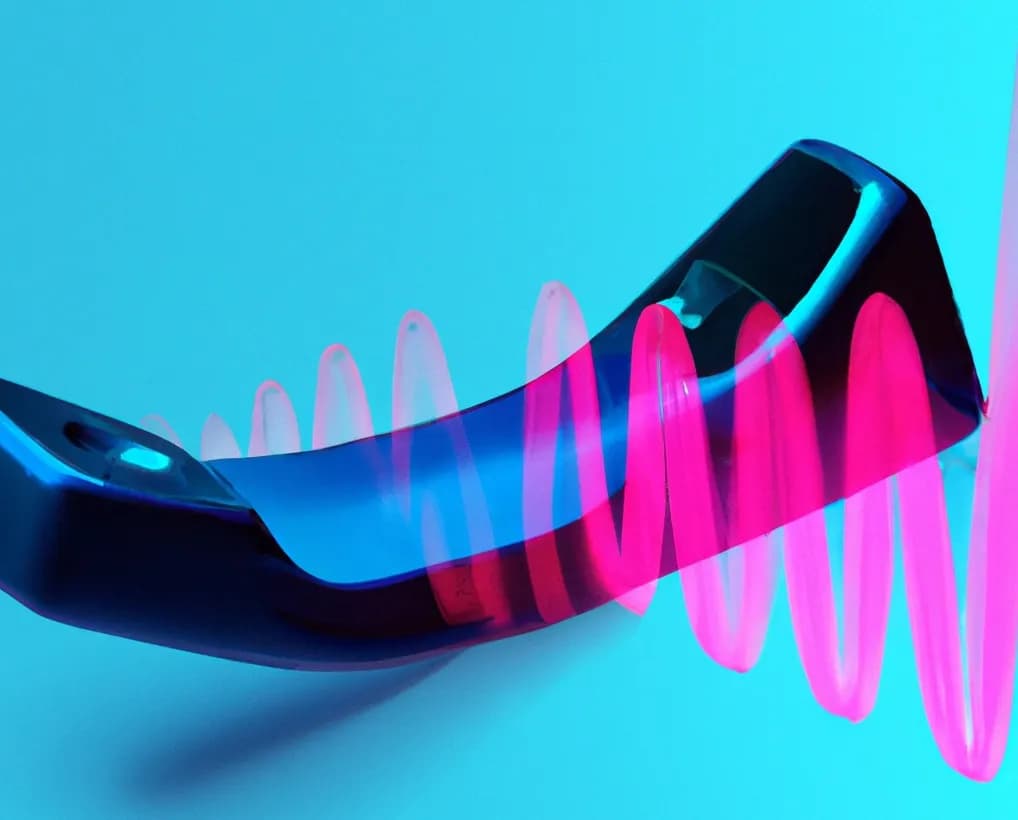

The app we developed is so useful, in fact, that we are now making it available to everyone on iOS through the App Store. Meet LazyNotes! It’s great for any repetitive meeting where good notes are important, and it’s already being used in the real world for use cases we didn’t envision. Several construction contractors, for example, now use the tool to record notes on job sites.

We hope LazyNotes is useful, but that’s not the point — this post is about the journey, not the destination. Informed by this experience and our work with companies in the space, here are a few broader lessons on building apps with foundation models that might be useful to startups or enterprises planning their own production deployments of LLMs.

Lesson 1: GPT-4 Is Really Badass at Summarization and Classification But Is Still a Work in Progress at a Few Tasks

Our prompt consists of about eight free-form questions that we try to answer for every call (product, team, traction, deal terms, etc.). The results are consistently great. With other solutions, very important details like numbers and names often got garbled. Now we feel liberated to put our pencils down and engage with the speaker.
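We haven’t published our actual prompt, so what follows is only a minimal sketch of how a Whisper-then-GPT-4 pipeline of this kind might be wired up. It assumes the OpenAI Python SDK (v1.x) and an `OPENAI_API_KEY` in the environment. The question wording, the system instructions, and the function names `build_summary_messages` and `summarize_call` are hypothetical; only the four topics (product, team, traction, deal terms) come from the post, and LazyNotes itself is an iOS app rather than a Python script.

```python
from collections.abc import Sequence

# Hypothetical question list: the four topics named in the post.
# The remaining questions of the "about eight" are not disclosed.
QUESTIONS = [
    "Product: What does the company build, and for whom?",
    "Team: Who are the founders, and what are their backgrounds?",
    "Traction: What revenue, usage, or growth numbers were mentioned?",
    "Deal terms: What round, amount, and valuation were discussed?",
]


def build_summary_messages(transcript: str, questions: Sequence[str] = QUESTIONS) -> list[dict]:
    """Build a chat-completions message list asking the model to answer each question."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    system = (
        "You summarize pitch calls for a venture firm's CRM. "
        "Answer each question in free-form prose. Copy numbers and names exactly "
        "as spoken; if the call does not cover a question, say so."
    )
    user = f"Questions:\n{numbered}\n\nTranscript:\n{transcript}"
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]


def summarize_call(audio_path: str, model: str = "gpt-4") -> str:
    """Transcribe an audio file with Whisper, then answer the questions with GPT-4."""
    # Imported lazily so the prompt builder above works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(audio_path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(model="whisper-1", file=audio_file)
    response = client.chat.completions.create(
        model=model,
        messages=build_summary_messages(transcript.text),
    )
    return response.choices[0].message.content
```

Keeping the prompt construction separate from the API calls makes it easy to tweak or version the questions (and test them against saved transcripts) without re-transcribing audio.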

To be fully transparent, however, not everything is perfect (yet). There are still limits to how well the AI can decipher weird company names, and more nuanced questions, like “how engaging is the speaker?”, don’t yield good answers. Still, the results are impressive and mirror the early success many are seeing with other LLM use cases like retrieval-augmented generation or even chat-to-purchase.

Lesson 2: Coding with GPT-4 Still Isn’t Easy, But Is Drastically Accelerated

When GPT-4 was first released, we were in awe of its coding capabilities. In fact, we were even telling people that coding assistants would let anyone code and that there might end up being a flood of new software on the market (incidentally, this app might be considered evidence of that phenomenon).

But after spending some time using GPT-4 to code this app, we were reminded that it still has a lot of limitations. For the most part, GPT-4 is an excellent assistant. LazyNotes is coded in Apple’s Swift (yay) using the SwiftUI framework, and frankly the documentation is pretty lacking in detail and examples, so GPT-4 was essential in unblocking common tasks. We didn’t need it to tell us how to write our if’s and then’s, but the syntax of fringe languages proved to be a really good use case for a code assistant. The limitations became clear when the coding tasks were more Apple-y and not well documented: GPT-4 would often produce “ideas” for fixing issues that didn’t work or that missed important details. Furthermore, we were reminded how much time is actually spent on technical details outside of the code itself (setting up servers and cloud services, configuring Xcode settings, dealing with Apple’s review process or OpenAI’s limits), all of which slow down development. In truth, getting a real app built is still hard once you get beyond the prototype stage.

Lesson 3: There Is a Lot More To a Company Than the Product

Once you get your app up and running, so what? How does anyone know about it? Does anyone care? What’s the business model? Do users get frustrated and leave? Now that it’s easier than ever to make amazing software, everything else — like sales, marketing, and customer support — becomes even more important. This is also a word of caution to new founders who are energized by the rapid prototype they just built. The prototype phase is like the first date, and there are lots of ups and downs around the corner. You may want to spend some time selling and marketing your new idea before being locked into a venture deal.

Lesson 4: LLM Ecosystem Tools: Are They Needed?

Our last observations concern the LLM tool ecosystem. In the simple app we created, we didn’t need any of the prompt tools, vector databases, LLM memory, or even LLM observability tools that are currently emerging to support the gen-AI ecosystem. But should this type of app scale, our first stop would likely be better observability to ensure our customer experience is acceptable. Second, new LLMOps tools could help as we test new prompts and new model versions as they become available. Vector stores and memory could also be useful if we want to search or chat with transcripts (e.g., “who was that company we met that did XYZ?”).
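LazyNotes doesn’t do this today, but a minimal sketch shows what “search our transcripts” boils down to. It assumes each transcript has already been turned into an embedding vector by some embedding model; the short vectors and call IDs below are made-up stand-ins, not real embeddings. Ranking by cosine similarity with a brute-force scan is the core idea; a vector database replaces that scan with an approximate nearest-neighbor index once the number of transcripts grows.

```python
import math
from collections.abc import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_transcripts(
    query_vec: Sequence[float],
    index: dict[str, Sequence[float]],
    top_k: int = 3,
) -> list[tuple[str, float]]:
    """Brute-force nearest-neighbor search: score every transcript, return the top_k."""
    scored = [(call_id, cosine_similarity(query_vec, vec)) for call_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy index: call IDs mapped to stand-in "embeddings".
index = {
    "acme-call": [0.9, 0.1, 0.0],
    "globex-call": [0.1, 0.9, 0.0],
    "initech-call": [0.0, 0.2, 0.9],
}
print(search_transcripts([1.0, 0.0, 0.0], index, top_k=1))
```

In practice the query vector would come from embedding the question itself (“who was that company we met that did XYZ?”) with the same model used for the transcripts.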

While many new apps may be able to get away with the immediate novelty of GPT-4, successful users of LLMs will eventually need to reach for some of these essentials.

Conclusion

In summary, we have a new tool in our Swift Ventures toolkit and a lot of great learnings to help inform our investments and help founders. But maybe the best learning of all is that it’s still as hard as ever to build a transformational company.